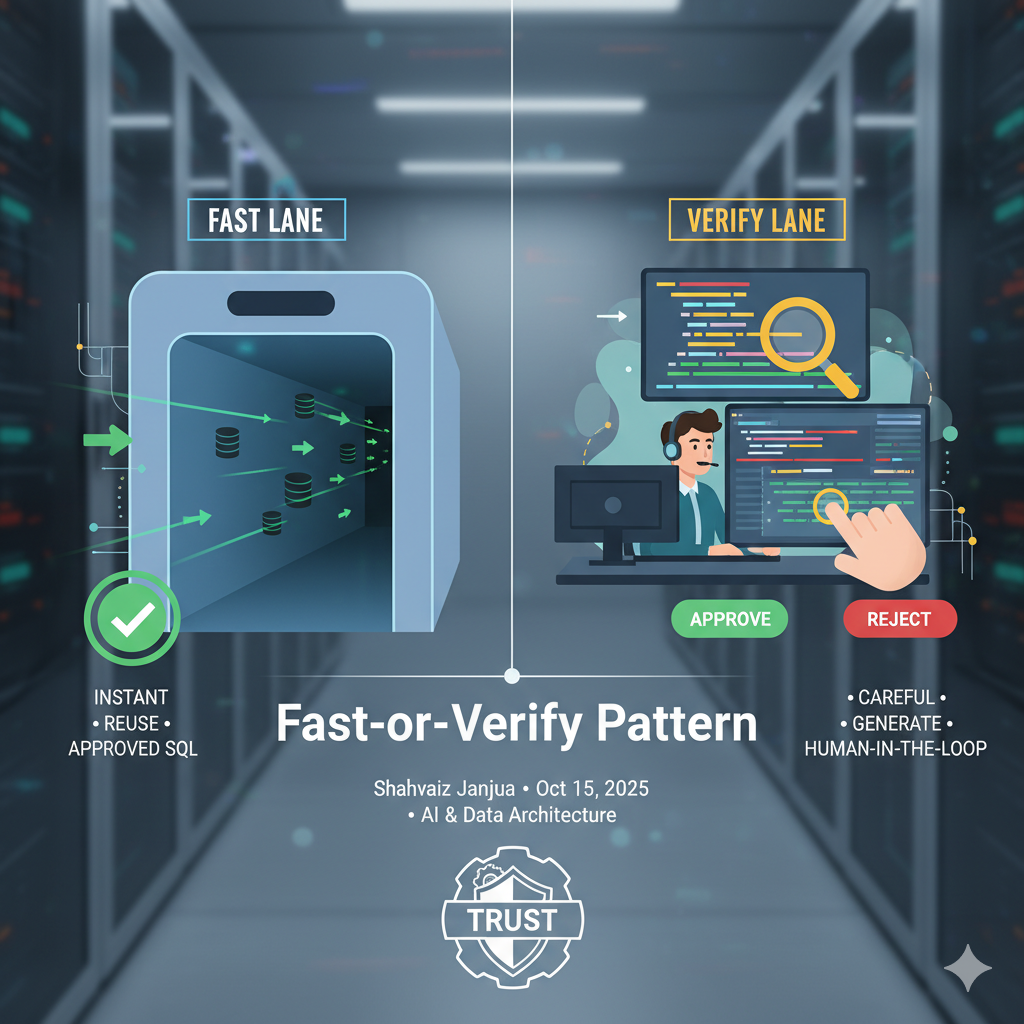

Fast-or-Verify Pattern

Most NL→SQL demos are impressive… right up to the moment you need real guarantees. In finance, healthcare, or regulated ops, “pretty good” isn’t good enough.

This article lays out a two‑lane architecture that answers known questions instantly and routes novel ones to human‑in‑the‑loop (HITL) verification and then learns from every review.

Think of it like airport security:

TSA Pre✓® for vetted passengers (fast, cheap, repeatable) and manual screening for everyone else (slower, safer, documented). Same airport, two speeds, one goal: trust!

Lane 1 - The Fast Lane (Reuse, don’t regenerate)

1) Semantic lookup inside the database. When a user asks, the agent runs a similarity search over a repository of previously approved question⇄SQL pairs stored as embeddings in Oracle Autonomous Database 23ai AI Vector Search. If it finds a true match, it reuses the human‑approved SQL - no new generation, no chance to hallucinate new SQL.

2) LLM‑as‑a‑Judge gate. A lightweight judge agent checks whether the new question is semantically identical to the validated one (e.g., cosine threshold and schema terms align). If yes → execute the approved SQL and return the answer. If not → route to the Quality Lane.

Why this works: you avoid generation entirely for common asks. Over time, your “approved SQL cache” compounds - faster answers, lower cost, higher consistency.

Lane 2 - The Quality Lane (Generate, pause, verify, learn)

For novel/ambiguous asks we generate carefully and verify before execution:

1) Clarify & improve the question (short feedback loop).

2) Generate candidate SQL using Select AI in‑database - keeping data movement minimal and auditability high. Don’t execute yet.

3) HITL checkpoint with interrupts. The agent pauses; an analyst verifies business logic, schema, joins, filters, limits and then will either Approve → execute, or Reject → the agent revises and resubmits.

4) Close the loop. Store the now‑approved question⇄SQL as an embedding for future Fast‑Lane reuse (with lineage + reviewer + timestamp).

Reality check: NL→SQL is powerful but can mis-join, mis-filter or reference non-existent columns/tables. The human checkpoint is your safety valve.

Demo repo & UI

I've published a Gradio demo that shows the main moving parts with a clean UI. What it includes today:

- LLM-as-a-Judge decisioning (the “is this semantically the same?” gate).

- Fast Lane behavior for returning pre-approved SQL when there’s a true match (no execution).

- Quality Lane (partial): up to NL→SQL generation (SQL is produced and displayed, not executed).

- Confidence label: "High/Medium/Low" classification for the generated SQL.

What it does not include:

- Human-in-the-loop review UI (approve/reject/edit) and the asynchronous pause/resume cycle.

- Automatic vectorization & storage of new question⇄SQL approvals (i.e., embedding + metadata write-back).

- SQL execution against a database.

👉 Repo: https://github.com/shjanjua/fast-or-verify-nl2sql - it’s built with Gradio so you can run and share the demo quickly without front-end heavy lifting.

Pre-reqs: Oracle Database with Select AI configured and a vector table containing approved Question, SQL & Embedded-Question for Fast-Lane lookup.

Under the Hood

- Oracle Autonomous Database 23ai – AI Vector Search: semantic lookup of approved Q⇄SQL right where your data lives. Oracle Documentation

- Select AI: in-database NL→SQL + RAG for the Quality Lane. Oracle Documentation

- OCI Generative AI: managed LLMs/embeddings for judging, rewriting and confidence scoring.

- Python + LangGraph: multi-step agent.

- Gradio: lightweight UI to expose the flow and show the SQL being produced.

Guardrails that matter

- Least privilege: read-only roles/views; block DDL/DML by policy.

- Dual thresholds: similarity and judge verdict; escalate to HITL on doubt.

- Query “unit tests” (row-count bounds, KPI validations, time windows) — not in demo.

- “Show your work” UX: SQL preview, tables touched, filter summary (the demo previews SQL only).

- Governance metadata (reviewer, version, glossary tags) — not in demo.

What to measure (once you wire in HITL + execution)

- Fast-Lane hit rate (% answered via reuse).

- Time-to-answer (p50/p95) and review latency.

- HITL approval rate and revision count.

- Escapes (post-hoc corrections) → target near-zero.

- Cost per answer vs BI baseline.

Conclusion

This pattern doesn’t replace experts, it scales them. Every human‑approved query becomes a reusable asset, turning your conversational layer into a trust machine over time